Last modified 2001-04-24 - Jarmo Mäki

This document is a testing plan for the Monrovia project ordered by Mgine Technologies (formerly known as Done Wireless Oy). It describes how the testing is done.

Monrovia is a multi-player, turn-based game platform for Palm clients. Its purpose is to show whether a system of this kind is feasible.

The hardware required for testing the different releases of the game is the Monrovia server at Mgine and a standard PC with 100 MB of free hard disk space and otherwise reasonable equipment. The PC should have access to the Internet.

The Monrovia server is described in the Technical Specification document.

A handheld Palm computer is also needed, especially for the system tests.

The operating system of the server is Red Hat Linux 6.2. An SSH daemon should be running on the server and the J2SE libraries should be installed. The software parts being tested are copied to the ~/hayabusa directory and can be accessed from there.

A standard PC is required when doing tests using the Palm emulator.

The operating system of the PC is Windows 95/98/2000/NT.

The PC requires an SSH client program to establish the terminal connection to the server. The user copies the client software, including the Palm OS Emulator version 3.0a8, from the Monrovia server. If the emulator included in the Sun KVM distribution is used, it must be installed on the workstation.

We use SSH authentication to connect to the Monrovia server. The workstations used to test the game are mostly our home computers, which are not publicly accessible. The server is backed up on tape every day, so if something vital is lost it can be restored from tape.

Test personnel use a test report to log the tests they perform. The purpose of the test report is to collect precise information about the test cases: when the tests were carried out, who performed them, how long they took, what the result was and whether the test passed.
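As an illustration only, one entry of such a test report could be modelled as a small record. The class name and field names below are hypothetical and are not taken from the actual test report template; they simply mirror the items listed above.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ReportEntry:
    """One logged test run. All field names are illustrative assumptions."""
    test_case: str        # identifier or short description of the test case
    performed_on: date    # when the test was carried out
    tester: str           # who performed the test
    duration: timedelta   # how long the test took
    result: str           # what was observed
    passed: bool          # whether the test passed


# Example entry with made-up values.
entry = ReportEntry(
    test_case="example case",
    performed_on=date(2001, 4, 24),
    tester="example tester",
    duration=timedelta(minutes=30),
    result="worked as specified",
    passed=True,
)
```

Keeping every entry in the same shape makes it easy to see at a glance which items are still missing from a report.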

The map which is generated for the test can be found in ~/hayabusa/monrovia/game/data.

Tools provided by the course, such as Burana and Tirana, are used to report errors and working hours.

The people who run the test cases are members of our group. The final version of the game will also be tested by employees of Mgine. Mgine employees perform only functional and usability tests.

The test personnel are divided into code writers and system testers.

Code writers test that the code they have written is correct. They are responsible for module testing as described in chapter 4. Code writers also perform integration tests.

There is a dedicated test group to test the whole system as described in chapter 4. The system testers test that the game works as described in the requirements specification.

Members of our group don't require any special training to perform the test cases. Employees of Mgine will be told carefully what they should do, and if technical training is needed it will be provided within the bounds of our resources.

Integration testing aims to test the interoperability of the server/protocol interface with the client/protocol interface. If required, the integration of Monrovia components on demonstration equipment will also be tested.

The objective of the system test is to ensure that all required functions work properly and that the GUI can be easily adopted. System testing also includes performance testing and usability testing.

Functional testing is divided into two categories. The test cases are described in more detail in the test report.

The system testers test that the game can be played by 10 players at the same time.

The effect of connection breaks should be tested. This means that the test persons test what happens when

The following requirements will be tested by system testers:

The performance of the server will be tested. This means that the game should run smoothly without any major lag. The performance of the server can be easily tested by making simple tests locally on the server and by using the GUI. Testing the server includes checking how much memory, processor time and hard disk space is used.
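A local check of this kind could look roughly like the sketch below. It is not part of the Monrovia software: the function name `resource_snapshot` and the returned keys are assumptions made for illustration. The sketch reads disk usage and the one-minute load average from the Python standard library, and it leaves memory out because reading it portably needs platform-specific code.

```python
import os
import shutil


def resource_snapshot(path="/"):
    """Return disk usage for `path` and the 1-minute load average.

    Hypothetical helper for a manual server check, not Monrovia code.
    """
    usage = shutil.disk_usage(path)          # total, used, free in bytes
    try:
        load_1min = os.getloadavg()[0]       # only available on Unix-like systems
    except (AttributeError, OSError):
        load_1min = None                     # not obtainable on this platform
    return {
        "disk_used_mb": usage.used / 2**20,
        "disk_free_mb": usage.free / 2**20,
        "load_1min": load_1min,
    }


# Take one snapshot of the current directory's file system.
snapshot = resource_snapshot(".")
```

Taking one snapshot before a test session and one during it gives a simple before-and-after comparison for the test report.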

The capacity of the client will be tested. These tests ensure that the system doesn't overload the Palm.

Results from module, integration and system testing are gathered in a formal style into the test report.

The Test Report and Burana are the most important sources of information for code writers when they fix errors, and therefore both should be filled out with care.

The logs of the server will be stored in a safe place in case code writers need to examine them later.

Many tests need to be done several times and therefore approximately 10 hours of resources need to be allocated for testing for each phase. This does not include resources needed to make test cases.

In the system tests we use grades to indicate how well a test was passed:

A+: The tested part of the system worked perfectly.

A: The tested part of the system passed the test without any major problems.

A-: The tested part of the system worked as specified in the requirements specification, but there was something that didn't please the test person.

B: The tested part failed. The problem is minor and the fix can wait.

C: The tested part failed. There is a major problem and it needs to be fixed as soon as possible.

When performing system tests, the tester is supposed to perform the tests which are listed in the system test part of the test report. When he has finished the tests listed in the report, he will spontaneously test things he thinks are important. The idea is first to make sure that all tests which have been created beforehand are run and then to let the test person try things out by himself.

If a test case fails, it is very important that the test person knows how to repeat the operation which caused the failure. Tests are repeated a couple of times so that randomly occurring faults are also detected.

The testing must cover enough that the current version can be released without any major problems. Minor problems which hinder but don't prevent the demonstration are acceptable. The final version should not have any bugs or errors left. Our goal is to have only A+ grades in the system test part when the final version is tested.

The personnel required to perform the system tests are two members of the Hayabusa group who have not participated in creating the game and one member of the Hayabusa group who is familiar with the system.

All versions should be tested for the first time at least two weeks before they are demonstrated so that there is enough time to make fixes.

The testing of application related parts is done before writing any code to prevent any unnecessary code from being written.

The following application related parts are tested:

The functionality, ergonomics and clarity of the GUI will be tested as explained in chapter 4.3.1.

The recovery of the GUI will be tested as explained in chapter 4.3.1 and in the test report.

The performance of the Palm will not be tested until the end of T3.

The performance of the server will be tested as described in chapter 4.3.2 and in the test report.

The performance of the emulator has been tested earlier and it is known to work properly.

If parts of the system are updated, a regression test will be run to ensure that the other parts, and therefore the whole system, still work properly. There is no regression test written at the moment, and the test personnel have to co-operate with the code writers to figure out what must be tested again.
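Until a regression test exists, one way to decide what must be tested again is to note which parts of the system each test case exercises and to rerun every case that touches an updated part. The sketch below assumes such a mapping is kept by hand; the test case identifiers and part names are invented for the example.

```python
def cases_to_rerun(coverage, changed_parts):
    """Return the test cases that exercise at least one changed part.

    coverage:      dict mapping a test case id to the parts it exercises
    changed_parts: iterable of parts that were updated
    Both structures are hypothetical bookkeeping, not project artefacts.
    """
    changed = set(changed_parts)
    return sorted(
        case for case, parts in coverage.items() if changed & set(parts)
    )


# Made-up example: three system test cases and the parts they touch.
coverage = {
    "ST-1": {"server", "protocol"},
    "ST-2": {"gui"},
    "ST-3": {"gui", "protocol"},
}
rerun = cases_to_rerun(coverage, ["protocol"])
```

In this example `rerun` contains ST-1 and ST-3, because both exercise the updated protocol part, while ST-2 can be skipped.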

This chapter defines the criteria to pass and fail a test.

A system test case is passed if it gets at least grade A-. So, to pass the whole system testing, all test cases must get at least grade A-.

Module and integration test cases are passed if the test case is graded as "passed". Module and integration tests are passed if all test cases are passed.

If all tests are passed, then a release can be made.

A system test case fails if it gets grade B or C. If one system test case fails, then the whole system testing also fails. Integration and module test cases fail if they are graded as "failed". If one module or integration test case fails, then the whole module or integration testing also fails.
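The pass and fail rules above can be written out as a short sketch. The function names are chosen for this example only; the grades and the "passed"/"failed" labels are the ones defined in this plan.

```python
PASSING_GRADES = {"A+", "A", "A-"}   # a system test case passes with at least A-
FAILING_GRADES = {"B", "C"}          # a system test case fails with B or C


def system_case_passed(grade):
    """Return True if a single system test case passed."""
    if grade not in PASSING_GRADES | FAILING_GRADES:
        raise ValueError(f"unknown grade: {grade!r}")
    return grade in PASSING_GRADES


def system_testing_passed(grades):
    """System testing passes only if every test case passed."""
    return all(system_case_passed(g) for g in grades)


def stage_passed(results):
    """Module or integration testing passes only if every case is 'passed'."""
    return all(r == "passed" for r in results)


def release_possible(system_grades, module_results, integration_results):
    """A release is possible only if all three kinds of testing passed."""
    return (
        system_testing_passed(system_grades)
        and stage_passed(module_results)
        and stage_passed(integration_results)
    )
```

Note that an empty list of results counts as passed in this sketch, so a caller would need to check separately that the test cases were actually run.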

A system test will be interrupted if an error which causes immediate interference to the system has been found. For example, if system tests cannot be continued before the error has been fixed, then the system test will be interrupted. Interrupting a system test requires the approval of the project manager.

In case of an unexpected event the project manager can interrupt a test based on his judgement.

After an error has been fixed the project manager decides if the test should be started from the beginning or if the test can be continued from where it was interrupted. It is also possible to start the test from the beginning and do only test cases which are thought to be affected by the fix.

A test is finished when all test cases have been tested.

In case of an unexpected event the project manager can end a test based on his judgement.

The test plan uses the same methods as described in the Project Plan.

The testing completes in time.

The demo matches customer's requirements.

Too many bugs found and no time to fix them

The work is destroyed (described in the project plan)

Test cases are too complicated to be performed

The GUI is never completed

Some of the tests fail and therefore other tests cannot be executed

Unrealistic testing schedule

Problems in the development environment

Defined tests cannot be executed

Teaching test persons takes too long

Key person becomes mad/quits/leaves/becomes addicted to alcohol

Communication problems inside the group

Critical part of software is delayed and another has to wait until it is finished

The test plan is clear enough but the test persons don't know what to test

The Risk scenarios can be found in appendix A

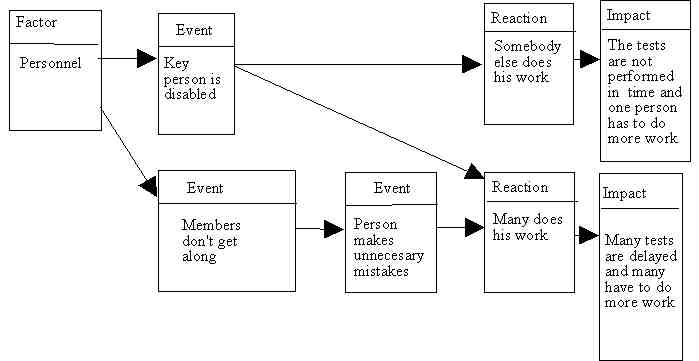

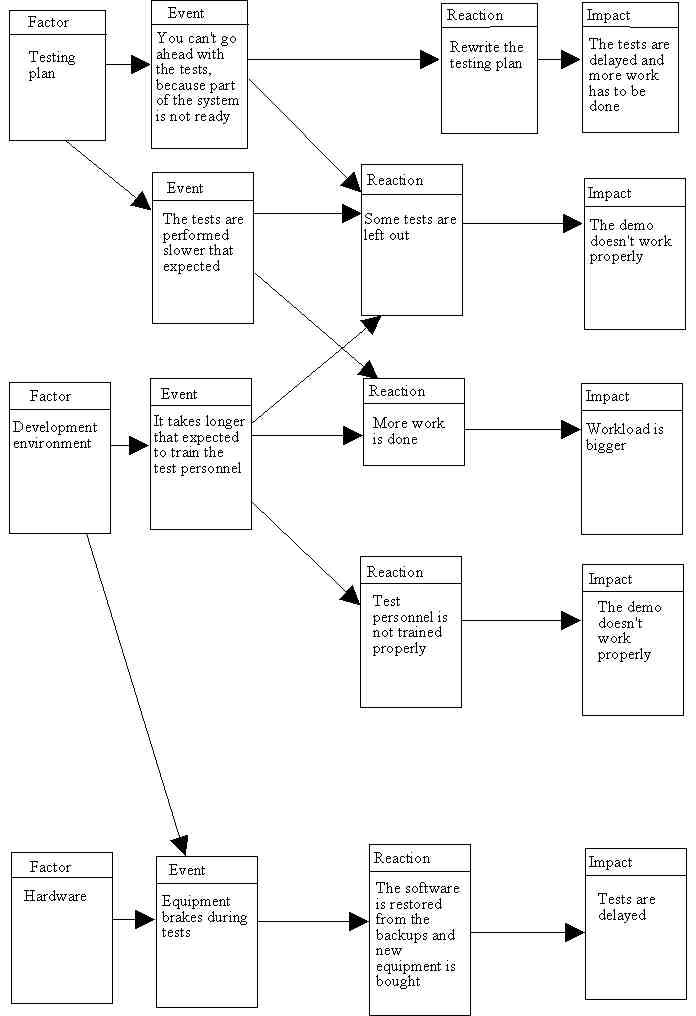

Summary of the risk scenarios:

Scenario 1: If a key person becomes disabled, somebody else has to do his work.

Scenario 2: If members don't get along, communication is lousy and unnecessary mistakes are made, then somebody has to fix the problems.

Scenario 3: If part of the system is not ready, then you can't go ahead with some tests and one solution is to make changes to the testing plan.

Scenario 4: If part of the system is not ready, then you can't go ahead with some tests and one solution is to leave some tests out.

Scenario 5: If tests are performed slower than expected, then one solution is to leave some tests out.

Scenario 6: If tests are performed slower than expected, then one solution is to work harder.

Scenario 7: If it takes longer than expected to train the test personnel, then more work has to be done.

Scenario 8: If it takes longer than expected to train the test personnel, then the test personnel are not trained properly.

Scenario 9: If equipment breaks down during tests, the software is (if needed) restored from backups and new equipment is bought.

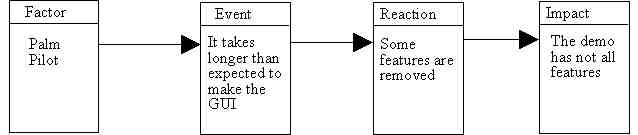

Scenario 10: If it takes longer than expected to make the GUI for the demo, we will have to leave some features out.

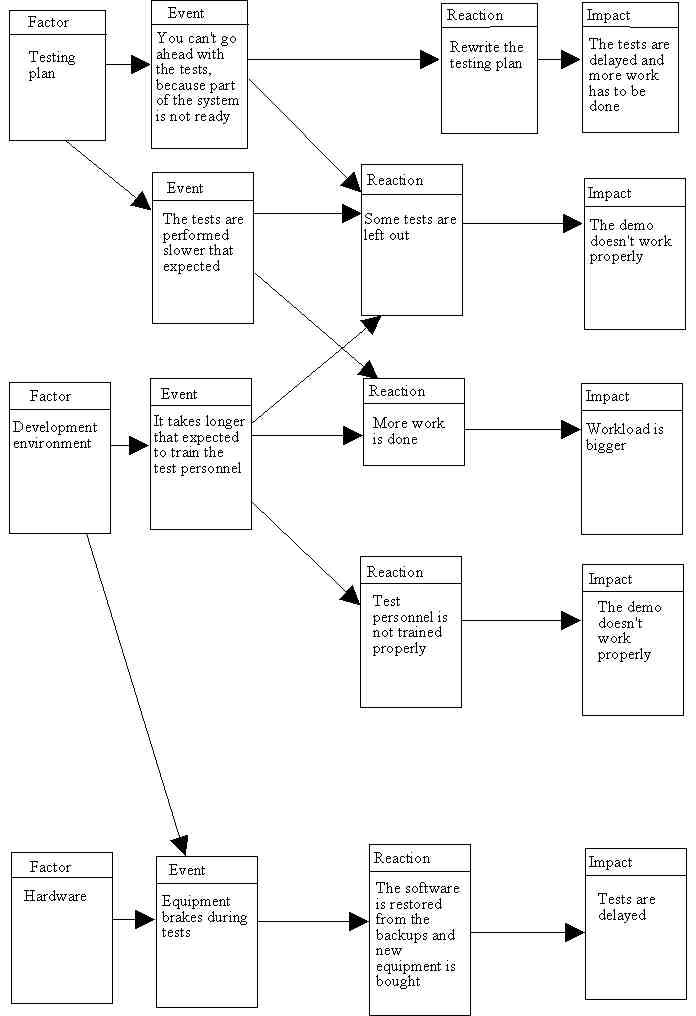

How likely and critical the scenario situations are:

We choose the worst cases from the table and analyze them. Then we make backup plans in case the risks are realized.

Scenarios 2, 3, 4 and 10 seem to be the worst cases.

Scenario 2:

The members of the team cannot be changed anymore and therefore it is very important that everybody tries to make the communication between team members successful. Plans need to be very accurate.

Scenarios 3, 4:

If time is available, members should try to do things beforehand or try to help other members of the team do their jobs.

Scenario 10:

Everybody should try to help code writers do their job. The GUI is the most important part of the demonstration and everybody should understand that if there is a problem someone cannot solve then we have to help him.

It seems likely that some part of the system will be delayed and that others will have to wait until it has been completed. Some solutions are shown above, but the fact is that if we want to stay on schedule we have to work hard.

The passing of the tests is approved as follows:

Project manager Juha Vainio approves the final version to be released.