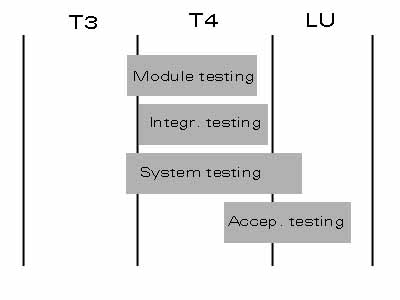

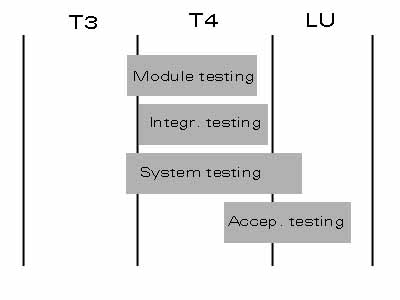

Picture 2. Testing effort scheduling.

| Author(s): | Eero Tuomikoski, Risto Sarvas |
| --- | --- |
| Version: | 3.0 |
| Phase: | T4 |
| Latest update: | 20.3.2001 |
| Document state: | Published |
| Inspected by: | |
| Inspection date: | |

Acceptance testing measures the goals of the whole project described in the Project Plan [PROJPLAN] and the Requirement Specification [REQSPEC]. These high-level targets are set by the customer. Therefore, acceptance testing must be considered more from the customer's point of view, as a final approval of the quality of the delivered product. Because the project is research-oriented, one of the goals is comprehensive documentation. Acceptance testing also requires that the results of system testing be presented, as one criterion for a successful exit of the project.

The acceptance test plan must be inspected by the customer in order to agree on common goals with the project team. The actual testing at the end of the project may also be performed together with a representative of the customer, if that is seen as feasible; this is decided by the customer. The functional requirements stated in the Requirement Specification [REQSPEC] and revised in the Functional Specification [FUNCSPEC] are not targets of acceptance testing. Testing of these features is covered in detail by system testing.

The purpose of system testing is to let the customers and users check that the system fulfills their actual needs, as documented in the Requirement Specification [REQSPEC]. It concentrates on non-functional requirements such as installability, usability, maintainability, modularity and documentation of the system. A characteristic shared by all these features is that measurable key factors are hard to find for them.

System testing is performed as black-box testing. In this approach, the implementation details of the system are assumed to be hidden from, and unknown to, the tester(s). Testing concentrates on the interfaces of the system, especially on its externally observable functionality and input-output behavior. In practice, this means that the tester takes the roles of the end user and the system operator.

The end product of this project consists of an architecture for a streaming media proxy and a prototype that implements a set of the key nodes in that architecture. A major contribution is the project documentation: it defines the end-user scenarios and use cases, requirements, functional and technical specifications, test plans and reports. Due to the research-oriented approach of the project, the quality of this documentation is considered essential. The design decisions, and the alternatives rejected for them, must be justified and documented. In other words, there is more emphasis on the documentation than on the program code actually developed. This emphasis is required by the customer.

The intended audience of this document is the customer, course personnel and project members.

Burana is used for error reporting when the project team finds it feasible. The target is set to start using it at the beginning of phase T4.

Phase T4: Considering the minimal benefits and the additional bureaucracy involved, the team decided not to use the Burana software for error reporting. Instead, the information is stored in test reports as described in chapter 4.2 'Defect handling'.

Netscape browser (4.0 or higher) is used as the client software, running on the Win98/WinNT operating systems. A media player supporting the MPEG and AVI formats (e.g. RealPlayer or Windows Media Player) is also required.

The CTC++ code coverage tool may be used if the white-box testing method is applied. This is decided separately when planning the testing for each phase.

Phase T4: All integration and module tests were automated. See chapters 7 and 8 for details.

No other training is seen as necessary at this stage. If new testing tools are introduced, additional training may be scheduled as needed.

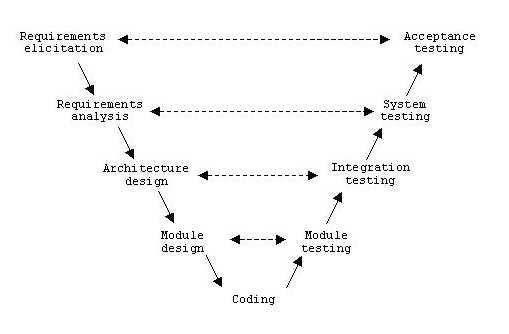

At the bottom, testing concentrates on the individual modules and their functionality at the source code level. The modules are developed independently of each other and can therefore be tested separately. Modules are not stand-alone programs, so special software must be created to test them; often this software is a by-product of the implementation. Modules are tested against their design documents. Only the critical modules are tested in this project, and these are defined separately in each phase. The end product of module testing is the corresponding test report.

Integration testing combines the different modules into a single subsystem and verifies the correctness of their interoperation. The focus is on their interfaces and co-operation: even if components work independently, new defects may be revealed when they are put together. Integration testing can be performed in two ways: by assembling all the modules together for execution at once, or by composing the system with a bottom-up approach. The latter, incremental way of organizing integration testing is preferred in this project, since it is easier to locate errors in smaller subsystems. Integration testing relies on the functional specification and technical documentation, concentrating on the architectural point of view. In this project, integration testing covers SMOXY, excluding clients and other external components, and its end product is a summarizing report.

At the system testing level, the whole system is tested. The entry criterion is that most of the software has already been covered by testing in the previous phases. System testing addresses issues that may be overlooked at the previous, software-intensive testing levels. Typically these concern poor overall performance, capacity requirements, configuration, and recovery capability in error situations. They are mostly the non-functional requirements described in the Requirement Specification, but some of them can also be traced to the Functional Specification. Going beyond SMOXY, system testing also brings external components, such as the clients, into its scope. Executed system testing is one of the requirements of acceptance testing.

In this project, acceptance testing verifies the high-level project goals, with the weight on the customer's point of view. The scope of acceptance testing was already discussed at the beginning of this document.

Black-box testing is preferred over white-box testing. Black-box testing relies only on the known, specified functionality of the tested object. In other words, the system is given some input x, its output f(x) is measured and compared against the predefined, specified output y. If f(x) = y, the system has passed the test.
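The comparison of f(x) against y can be illustrated with a minimal Python sketch. The names `BlackBoxCase` and `run_black_box` are hypothetical and not part of the SMOXY test tooling; the system under test is assumed to be any callable that maps an input to an observable output, and the OK/NOK verdicts mirror the status values of the defect report template.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class BlackBoxCase:
    """One black-box test case: an input x and its specified output y."""
    case_id: str
    x: Any
    expected_y: Any


def run_black_box(system: Callable[[Any], Any],
                  cases: List[BlackBoxCase]) -> Dict[str, str]:
    """Run each case against an opaque system and report OK or NOK.

    Only the input-output behaviour of `system` is observed; its
    implementation is never inspected, which is the black-box assumption.
    """
    results: Dict[str, str] = {}
    for case in cases:
        observed = system(case.x)  # f(x)
        results[case.case_id] = "OK" if observed == case.expected_y else "NOK"
    return results


if __name__ == "__main__":
    # Hypothetical stand-in for the system under test: str.upper.
    cases = [BlackBoxCase("T-1", "smoxy", "SMOXY"),
             BlackBoxCase("T-2", "proxy", "proxy")]
    print(run_black_box(str.upper, cases))  # {'T-1': 'OK', 'T-2': 'NOK'}
```

The tester never needs to know how `str.upper` works; only the specified pairs (x, y) matter, which is what makes such cases executable without knowledge of the implementation.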

If it is felt necessary, the project may perform white-box testing of certain key blocks of the code. Going through all of the software at the source code level is considered too exhausting an effort for the current resources of the project team, so white-box testing will be done only on selected areas, if so decided later.

The white-box testing is partially covered by selected inspections of the source code. Code inspections are arranged for the critical parts of the software. These are identified in each phase separately.

The following template is used to report any irregularities found while running system, integration or module test cases. The information needed to resolve or abandon an error report is attached to the original report.

| Date | Test identifier | Status | Priority | Tester |
| --- | --- | --- | --- | --- |
| [yyyy-mm-dd] | [Unique test case id] | [OK,NOK] | [H,M,L] | [Tester id] |
| Problem | [Describe how the problem occurred, what it was, whether it can be repeated, anything special in the environment, or whether there is something wrong with the test case.] | | | |
| Fix date | [yyyy-mm-dd] | Fixed by | [Guru id] | |
| Analysis | [Describe the actual problem in detail. How was it fixed? Was it not a problem after all? If it was not fixed, explain why. Was the problem in third-party software?] | | | |
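If the reports were ever kept in machine-readable form, the template maps naturally onto a record type. The following is a minimal sketch, not part of the project's tooling: `DefectReport` and `is_open` are hypothetical names, and the rule that a failed test counts as "open" until it has either a fix date or an analysis (for an abandoned report) is an assumption drawn from the template text.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

VALID_STATUSES = {"OK", "NOK"}
VALID_PRIORITIES = {"H", "M", "L"}


@dataclass
class DefectReport:
    """One filled-in copy of the defect report template (hypothetical)."""
    report_date: date
    test_id: str            # unique test case id, e.g. "TEST-PP-03"
    status: str             # "OK" or "NOK"
    priority: str           # "H", "M" or "L"
    tester: str
    problem: str
    fix_date: Optional[date] = None
    fixed_by: Optional[str] = None
    analysis: str = ""

    def __post_init__(self) -> None:
        # Reject values the template does not allow.
        if self.status not in VALID_STATUSES:
            raise ValueError(f"invalid status: {self.status!r}")
        if self.priority not in VALID_PRIORITIES:
            raise ValueError(f"invalid priority: {self.priority!r}")
        if self.fix_date is not None and self.fix_date < self.report_date:
            raise ValueError("fix date precedes report date")

    def is_open(self) -> bool:
        """Assumed rule: a NOK report with no fix date and no analysis is open."""
        return self.status == "NOK" and self.fix_date is None and not self.analysis
```

Dates in the template's yyyy-mm-dd form can be converted with `date.fromisoformat`, e.g. `date.fromisoformat("2001-03-20")`.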


Starting from the bottom of the V-model, module testing is planned and performed by the persons who develop the corresponding source code. This is feasible because these persons have the most knowledge of the required functionality. On the other hand, it violates a basic assumption: testing should be done independently, since programmers are biased by their own solutions and blind to their own errors. However, in this project there are no resources for external testing of the modules. As at every testing level, what was tested and what the results were must be documented. Module testing starts at the end of project phase T3, when the first source code blocks are created, and continues until the last component is finished in phase T4. In this project, module testing is the responsibility of the developers of the modules.

Integration testing starts during phase T4, when module-tested components become available. For this, the persons testing the software are different from those developing it, if the division of work allows that. It is very beneficial to rotate developers to test each other's code, since they have the necessary knowledge of the structure of the software. Full-time testers often lack this information, especially if the knowledge transfer has not fully taken place yet. Integration testing is an incremental process, starting with the basic skeleton of the software and ending with the full system. Therefore, it lasts until the end of the last implementation phase, T4, and is performed by a person responsible for integration testing (to be named).

For system testing, the primary resources are the persons less heavily involved in the source code development. Having a more external point of view, they do not bear the weight of ownership. Testing resources from outside the project could be obtained by temporarily exchanging persons with another project group; the feasibility of this idea is being studied. System test planning started at the end of phase T2, while the actual system testing is performed in phase T4, with any overflow rescheduled to phase LU. System testing is the responsibility of the system tester (to be named).

Acceptance testing also involves the customer in the delivery phase, LU. It is in the customer's interest to oversee the final testing rounds, in order to become familiar with the end product and to evaluate the quality of the product and, more importantly, the quality of the project documentation. This includes estimating the depth, the level of research and the value of the documentation for the customer. In practice, acceptance testing starts at the end of phase T4 and ends a few weeks before the end of the whole project.

At the end of phase T2, the project presents the first demo of the software. A separate design document also discusses the testing aspects of the demo [DEMODOC].

The need for code inspections is evaluated in each phase separately. For more detailed resource allocation in testing, the project plan is revised in the beginning of each phase to describe the division of work.

The system test cases are defined in enough detail that executing them requires neither a tremendous effort nor deeper knowledge of how the SMOXY system works. This is done bearing in mind the customer's need to verify the correctness of the system. The second purpose of this level of detail is to facilitate the external testing effort performed by the opponent group at the beginning of phase LU. System tests are labor-intensive due to the low level of automation.

System testing does not attempt to solve defects that are encountered in third-party software used in testing.

Installation is not tested, because its implementation is not yet available; it is scheduled for the last phase, LU. Therefore, the tester must be provided with a preinstalled version of SMOXY.

| System test parameter | Value |
| --- | --- |
| CLIENT | Netscape 4 or higher [browser] |
| SMOXY | [ip] |
| ADMIN_ACCOUNT | [id:passwd] |
| FRONTDOOR | [ip:port] |
| DIRECTUI | n/a [url] |
| PORTAL | n/a [url] |
| CONF_UTIL | [web pages] |
| USER_ACCOUNT | [id:passwd] |
| LOGS | [collection of files] |
| SMALL_STREAM | [url] |
| MEDIUM_STREAM | [url] |
| LARGE_STREAM | [url] |
| COLOR_STREAM | [url] |
| INCORRECT_STREAM | [url] |
| AVI_STREAM | [url] |
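The test procedures below refer to these parameters in brackets, such as [CONF_UTIL], so the concrete values only need to be filled in once per test session. A minimal sketch of that substitution follows; `expand_procedure` and the example values are hypothetical, and upper-case names are assumed to denote parameters while lower-case hints such as [url] are left untouched.

```python
import re
from typing import Dict

# Parameter names in the test procedures are upper-case words in brackets,
# e.g. [CONF_UTIL]. Lower-case hints such as [url] or [id:passwd] do not match.
PLACEHOLDER = re.compile(r"\[([A-Z][A-Z_]*)\]")


def expand_procedure(text: str, params: Dict[str, str]) -> str:
    """Replace every [PARAMETER] in a test procedure with its configured value."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in params:
            # Fail loudly rather than run a test with an unresolved parameter.
            raise KeyError(f"undefined system test parameter: {name}")
        return params[name]

    return PLACEHOLDER.sub(substitute, text)


if __name__ == "__main__":
    # Hypothetical values; the real ones are filled in per test session.
    params = {"CONF_UTIL": "http://smoxy.example.org/conf",
              "USER_ACCOUNT": "tester:secret"}
    print(expand_procedure(
        "Go to [CONF_UTIL]. Enter your [USER_ACCOUNT] information.", params))
```

Raising an error for an unknown name is a deliberate choice in this sketch: a procedure that still contains an unresolved parameter would otherwise be executed against an undefined target.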

| SYSTEM | TEST-ADMIN-01 | Medium | FUNC-ADMIN-01 |
| --- | --- | --- | --- |
| Description | Start SMOXY. | | |
| Procedure | Log in to the [SMOXY] host with [ADMIN_ACCOUNT]. Enter the commands 'smoxy.x' and 'proxyd.x localhost 6999 ~/usr/db' in separate windows. (Stopping SMOXY requires entering CTRL-C in these windows.) | | |
| Expected result | SMOXY has started. [LOGS] show timestamps for this event. | | |

| SYSTEM | TEST-PROFILE-01 | Medium | FUNC-PORTAL-03 |
| --- | --- | --- | --- |
| Description | The user configures his profile in SMOXY. The changes are applied by the SMOXY system. | | |
| Procedure | Go to [CONF_UTIL]. Enter your [USER_ACCOUNT] information. Define the client to have a black-and-white display. Save the modification. Reload your profile. | | |
| Expected result | The reloaded profile must contain the modification. | | |

| SYSTEM | TEST-PROFILE-02 | Medium | FUNC-PORTAL-03 |
| --- | --- | --- | --- |
| Description | The user tries to make a non-permitted change to his profile in SMOXY. The changes are not applied by the SMOXY system. | | |
| Procedure | Go to [CONF_UTIL]. Enter your [USER_ACCOUNT] information. Select a non-permitted combination of filters. Save the modification. | | |
| Expected result | The system must not allow the modification to be applied. | | |

| SYSTEM | TEST-ASPROXY-01 | Low | FUNC-PORTAL-02 |
| --- | --- | --- | --- |
| Description | SMOXY must require the user to authenticate himself. With a correct user id/password combination, SMOXY returns the requested page. | | |
| Procedure | Configure your [CLIENT] to use the proxy settings [FRONTDOOR]. Enter [SMALL_STREAM] into the location field of your [CLIENT]. SMOXY responds with a proxy authentication window. Enter the [USER_ACCOUNT] parameters. | | |
| Expected result | Access to the SMOXY system is granted and the requested page is returned. [LOGS] show that the user was identified. | | |

| SYSTEM | TEST-ASPROXY-02 | Low | FUNC-PORTAL-02 |
| --- | --- | --- | --- |
| Description | SMOXY must require the user to authenticate himself. With an incorrect user id/password combination, use of SMOXY is denied. | | |
| Procedure | Verify that your [CLIENT] uses the proxy settings [FRONTDOOR]. Connect to [SMALL_STREAM]. SMOXY responds with a proxy authentication window. Enter an incorrect password for [USER_ACCOUNT]. | | |
| Expected result | Access to the SMOXY system is not granted. [LOGS] show that the user was not identified. | | |

| SYSTEM | TEST-ASPROXY-03 | High | FUNC-PORTAL-01 |
| --- | --- | --- | --- |
| Description | SMOXY is configured to be used as a proxy, and a video stream is fetched. | | |
| Procedure | Verify that your [CLIENT] uses the proxy settings [FRONTDOOR]. Enter [SMALL_STREAM] into your [CLIENT]. SMOXY responds with a proxy authentication window. Enter the [USER_ACCOUNT] parameters. | | |
| Expected result | The video stream is delivered and displayed. [LOGS] show that the stream was delivered through SMOXY. The size of the received file must be the same as that of the original. | | |

| SYSTEM | TEST-FRONTDOOR-01 | High | n/a |
| --- | --- | --- | --- |
| Description | Recovery from a non-existent server. | | |
| Procedure | Connect to a URL that points to a server that does not exist. | | |
| Expected result | A proper error message is displayed in the client. | | |

| SYSTEM | TEST-FRONTDOOR-02 | High | n/a |
| --- | --- | --- | --- |
| Description | The request is cancelled by the user. The SMOXY system performs a proper cleanup. | | |
| Procedure | Start fetching [SMALL_STREAM]. During the download, cancel the action by stopping or shutting down the client. | | |
| Expected result | SMOXY must recognize that the client has been lost. Further connections are accepted and processed without interference. | | |

| SYSTEM | TEST-FRONTDOOR-03 | High | n/a |
| --- | --- | --- | --- |
| Description | Fetching a medium-sized video stream. | | |
| Procedure | Verify that your [CLIENT] uses the proxy settings [FRONTDOOR]. Enter the [MEDIUM_STREAM] location into your [CLIENT]. SMOXY responds with a proxy authentication window. Enter the [USER_ACCOUNT] parameters. | | |
| Expected result | The video stream is delivered and displayed. [LOGS] show that the stream was delivered through SMOXY. | | |

| SYSTEM | TEST-FRONTDOOR-04 | High | n/a |
| --- | --- | --- | --- |
| Description | Fetching a large video stream. | | |
| Procedure | Verify that your [CLIENT] uses the proxy settings [FRONTDOOR]. Enter the [LARGE_STREAM] location into your [CLIENT]. SMOXY responds with a proxy authentication window. Enter the [USER_ACCOUNT] parameters. | | |
| Expected result | The video stream is delivered and displayed. [LOGS] show that the stream was delivered through SMOXY. | | |

| SYSTEM | TEST-ENGINE-01 | High | FUNC-CONTROL-01, FUNC-CONTROL-03 |
| --- | --- | --- | --- |
| Description | The connection to the external user profile storage is tested. SMOXY is able to retrieve the profile corresponding to the user's identity when it receives a request originating from this user. | | |
| Procedure | Go to [CONF_UTIL]. Log in with [USER_ACCOUNT]. Modify the profile to accept only black-and-white streams. Save the modifications. Fetch [COLOR_STREAM]. | | |
| Expected result | A black-and-white stream must be received. SMOXY logs show that the stream was delivered through SMOXY. | | |

| SYSTEM | TEST-ENGINE-02 | High | FUNC-ENGINE-01, FUNC-CONTROL-03 |
| --- | --- | --- | --- |
| Description | Conversion from one video stream format to another, AVI to MPEG, is tested. The user profile requires the client to receive all streams in MPEG format. This implies that SMOXY must convert the outgoing stream to MPEG regardless of the incoming stream type. | | |
| Procedure | Fetch [AVI_STREAM]. | | |
| Expected result | The user receives an MPEG stream. This is visible in RealPlayer, and [LOGS] show that the stream was delivered through SMOXY. | | |

| SYSTEM | TEST-ENGINE-03 | High | n/a |
| --- | --- | --- | --- |
| Description | Recovery from a broken stream is tested. A proper stream has been modified to contain malicious garbage data. | | |
| Procedure | Fetch [INCORRECT_STREAM]. | | |
| Expected result | SMOXY must recognize the error situation and recover. | | |

| SYSTEM | TEST-PORTAL-01 | High | FUNC-PORTAL-01 |
| --- | --- | --- | --- |
| Description | The tester is provided with a web page that contains a single link pointing to a stream. The link has a special format that actually connects to a SMOXY frontdoor, but this is not visible to the tester. Selecting the link results in the stream being delivered to the client as it is. | | |
| Procedure | Verify that your [CLIENT] does not use proxy settings. Use your browser [CLIENT] to select the single link on the web page [PORTAL] that points to [SMALL_STREAM]. | | |
| Expected result | The video stream is delivered and displayed. [LOGS] show that the stream was delivered through SMOXY. | | |

| SYSTEM | TEST-DIRECTUI-01 | High | FUNC-DIRECTUI-01 |
| --- | --- | --- | --- |
| Description | The tester is provided with a web page [DIRECTUI] that contains an input field. The tester enters the [SMALL_STREAM] location. The information is posted to a SMOXY frontdoor, but this is not visible to the tester. The stream is delivered (unmodified) to the client and displayed. | | |
| Procedure | Verify that your [CLIENT] does not use proxy settings. Go to [DIRECTUI]. Fetch a video stream by giving a URL and choosing 'Get it'. | | |
| Expected result | The user gets the requested stream. [LOGS] show that the stream was delivered through SMOXY. | | |

The first set of integration tests covers the proprietary packet protocol between a Frontdoor and the Smoxy Engine. This is a traditional client-server setup, with the Frontdoor as the client and the Engine as the server.

The second set of tests verifies the functionality when Database is integrated with Frontdoor and Engine.

The first set of test cases tests the Smoxy Engine, which consists of the modules network.h, connection.h, parallel.h and stream.h. For this purpose, a test implementation of the Frontdoor was created. This Frontdoor sends messages to the Smoxy Engine using the packet protocol (PP). Its purpose is to test the robustness of the server-side implementation of PP. Smoxy's capability to handle parallel requests is also tested. See chapter 4.1.1.1 in [TECHSPEC] for details.

| INTEGRAT | TEST-PP-01 | Med | n/a |
| --- | --- | --- | --- |
| Description | Successful client identification. | | |
| Procedure | Send a [VALID_USERID] using a packet of type EPP_USERID. | | |
| Expected result | Smoxy must respond with a packet of type EPP_OK. | | |

| INTEGRAT | TEST-PP-02 | Med | n/a |
| --- | --- | --- | --- |
| Description | Unsuccessful client identification. | | |
| Procedure | Send an [INVALID_USERID] using a packet of type EPP_USERID. | | |
| Expected result | Smoxy must respond with a packet of type EPP_ERROR. | | |

| INTEGRAT | TEST-PP-03 | Med | n/a |
| --- | --- | --- | --- |
| Description | Send a query to Smoxy to deliver a stream identified by a valid host:port combination and request message. | | |
| Procedure | Send a packet of type EPP_STREAM_LOCATION containing [VALIDCS:PORT]. Send a second packet of type EPP_STREAM_REQUEST containing a valid HTTP request. | | |
| Expected result | Smoxy must respond with EPP_STREAM_DATA packets. The last packet of the transmission must be EPP_EOT. | | |

| INTEGRAT | TEST-PP-04 | Med | n/a |
| --- | --- | --- | --- |
| Description | Send a query to Smoxy to deliver a stream identified by an invalid host:port combination and request message. | | |
| Procedure | Send a packet of type EPP_STREAM_LOCATION containing [INVALIDCS:PORT]. Send a second packet of type EPP_STREAM_REQUEST containing a valid HTTP request. | | |
| Expected result | Smoxy must respond with a packet of type EPP_ERROR. | | |

| INTEGRAT | TEST-PP-05 | Med | n/a |
| --- | --- | --- | --- |
| Description | Send Smoxy a stream that it can handle. | | |
| Procedure | Send a packet of type EPP_STREAM_DIRECTLY to Smoxy, followed by a packet of type EPP_STREAM_TYPE with data [KNOWN_STREAM]. | | |
| Expected result | Smoxy must respond with a packet of type EPP_OK. | | |

| INTEGRAT | TEST-PP-06 | Med | n/a |
| --- | --- | --- | --- |
| Description | Send Smoxy a stream that it cannot handle. | | |
| Procedure | Send a packet of type EPP_STREAM_DIRECTLY to Smoxy, followed by a packet of type EPP_STREAM_TYPE with data [UNKNOWN_STREAM]. | | |
| Expected result | Smoxy must respond with a packet of type EPP_ERROR. | | |

| INTEGRAT | TEST-PP-07 | Med | n/a |
| --- | --- | --- | --- |
| Description | Perform TEST-PP-05. Continue sending stream data until the end of the stream. | | |
| Procedure | Send EPP_STREAM_DATA packets and terminate with an EPP_EOT packet. | | |
| Expected result | Smoxy must respond with EPP_STREAM_DATA packets. The last packet of the transmission must be EPP_EOT. | | |

| INTEGRAT | TEST-PP-08 | Med | n/a |
| --- | --- | --- | --- |
| Description | Perform TEST-PP-05. Then send a user identification, which violates the protocol message sequence. | | |
| Procedure | Send EPP_USERID. | | |
| Expected result | Smoxy must respond with a packet of type EPP_ERROR. | | |

| INTEGRAT | TEST-PP-09 | Med | n/a |
| --- | --- | --- | --- |
| Description | Use two frontdoors to run parallel requests. | | |
| Procedure | Run test TEST-PP-05 with two frontdoors. | | |
| Expected result | Smoxy must respond with EPP_STREAM_DATA packets to both frontdoors. The last packet of each transmission must be EPP_EOT. | | |
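Because every expected result in TEST-PP-01 to TEST-PP-09 is stated in terms of packet types, the verdicts can be checked mechanically once the test Frontdoor has recorded what it received. The sketch below works on packet type names only; the actual packet framing and encoding are defined in [TECHSPEC] and are not reproduced here. It assumes the test Frontdoor logs the type of each packet received after the test step, one list per frontdoor. `verdict`, `EXPECTED` and `FRONTDOORS` are hypothetical names.

```python
from typing import Callable, Dict, List, Sequence

# Packet types received by one test Frontdoor after the test step, in order.
Reply = Sequence[str]


def single(packet_type: str) -> Callable[[Reply], bool]:
    """Expect exactly one packet of the given type."""
    return lambda reply: list(reply) == [packet_type]


def stream_then_eot(reply: Reply) -> bool:
    """Expect one or more EPP_STREAM_DATA packets terminated by EPP_EOT."""
    return (len(reply) >= 2
            and reply[-1] == "EPP_EOT"
            and all(t == "EPP_STREAM_DATA" for t in reply[:-1]))


# Expected results transcribed from TEST-PP-01 ... TEST-PP-09.
EXPECTED: Dict[str, Callable[[Reply], bool]] = {
    "TEST-PP-01": single("EPP_OK"),
    "TEST-PP-02": single("EPP_ERROR"),
    "TEST-PP-03": stream_then_eot,
    "TEST-PP-04": single("EPP_ERROR"),
    "TEST-PP-05": single("EPP_OK"),
    "TEST-PP-06": single("EPP_ERROR"),
    "TEST-PP-07": stream_then_eot,
    "TEST-PP-08": single("EPP_ERROR"),
    "TEST-PP-09": stream_then_eot,
}

# TEST-PP-09 uses two frontdoors; every other case uses one.
FRONTDOORS = {"TEST-PP-09": 2}


def verdict(test_id: str, replies: List[Reply]) -> str:
    """Return "OK" if every frontdoor received the expected reply, else "NOK"."""
    check = EXPECTED[test_id]
    if len(replies) != FRONTDOORS.get(test_id, 1):
        return "NOK"
    return "OK" if all(check(r) for r in replies) else "NOK"
```

Keeping the expectations in a single table makes it easy to compare the automated checks against the test case definitions line by line.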

Not yet available, pending the implementation of the Database in SMOXY.

Testing is based on test programs that use the functions defined in the module header files. Both tested modules are used for communicating over TCP sockets and form the core of the SMOXY system. A straightforward server implementation was created to act as a peer for both modules. With this setup it was possible, for example, to test the transparency of the modules: when data is sent to a server that echoes all received data back, it can be verified that the data remains unchanged.
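The transparency check can be illustrated with a short Python sketch. This is not the project's own test program: the SMOXY modules network.h and connection.h are not used, and the standard-library `socket` module stands in for them. The sketch assumes a one-connection echo server on an ephemeral localhost port; `start_echo_server`, `echo_round_trip` and `is_transparent` are hypothetical names.

```python
import socket
import threading
from typing import Tuple


def start_echo_server() -> Tuple[int, threading.Thread]:
    """Start a one-connection TCP echo server on an ephemeral localhost port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))
    server.listen(1)
    port = server.getsockname()[1]

    def serve() -> None:
        conn, _ = server.accept()
        with conn:
            while True:
                chunk = conn.recv(4096)
                if not chunk:  # peer closed its sending side
                    break
                conn.sendall(chunk)  # echo the data back unchanged
        server.close()

    thread = threading.Thread(target=serve, daemon=True)
    thread.start()
    return port, thread


def echo_round_trip(payload: bytes, port: int) -> bytes:
    """Send the payload to the echo server and collect everything it returns."""
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(payload)
        client.shutdown(socket.SHUT_WR)  # signal that no more data follows
        received = bytearray()
        while True:
            chunk = client.recv(4096)
            if not chunk:
                break
            received.extend(chunk)
    return bytes(received)


def is_transparent(payload: bytes) -> bool:
    """True if the data survives a round trip through the echo server unchanged."""
    port, thread = start_echo_server()
    echoed = echo_round_trip(payload, port)
    thread.join(timeout=5)
    return echoed == payload
```

Because the client sends its whole payload before reading the echo, this sketch should be used with modest payloads; very large ones could fill both socket buffers and stall, so a fuller test would read and write concurrently.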

The test cases are automated with scripts and presented in the Test Report [TESTREPORT] document.

[1] There MUST NOT be any failing system tests of priority "High" (chapter 6).

[2] All system test cases MUST be executed at least once in phase T4.

System testing is accepted by the project manager. This is enough, since one of the acceptance tests (approved by the customer) requires that the results of system testing are presented.

Phase T4: The exit criterion for integration testing is that at least 80% of the test cases are executed successfully.

Phase T4: The exit criterion for module testing is that at least 80% of the test cases are executed successfully.
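The exit criteria above can be checked mechanically from the recorded results. A minimal sketch follows, assuming each result carries its priority and an OK/NOK status (None for a test not yet executed), and reading "80%" as "at least 80% of all planned test cases pass". `TestResult`, `system_exit_ok` and `pass_rate_exit_ok` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class TestResult:
    test_id: str
    priority: str            # e.g. "High", "Medium", "Low"
    status: Optional[str]    # "OK", "NOK", or None if not executed yet


def system_exit_ok(results: Iterable[TestResult]) -> bool:
    """System testing: every case executed, and no High-priority case failing."""
    results = list(results)
    all_executed = all(r.status is not None for r in results)
    high_failing = any(r.priority == "High" and r.status == "NOK" for r in results)
    return all_executed and not high_failing


def pass_rate_exit_ok(results: Iterable[TestResult], threshold: float = 0.8) -> bool:
    """Integration and module testing: at least `threshold` of all cases pass."""
    results = list(results)
    if not results:
        return False  # nothing planned means nothing to accept
    passed = sum(1 for r in results if r.status == "OK")
    return passed / len(results) >= threshold
```

Counting unexecuted cases as not passed is a conservative choice: a test that was never run cannot contribute to the 80% figure.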

[2] Testing does not find critical defects.

Countermeasure: Use the V-model, which proceeds from the bottom up. Divide testing into manageable tasks. Communicate actively with other team members and try to identify critical parts. Use an iterative approach.

[3] Testing tools are missing or testers don't know how to use them.

Countermeasure: Select tools in advance and arrange training.

[4] The test manager takes a one-month vacation (this risk has already materialized).

Countermeasure: The testing is rescheduled, and the testing effort in phase T4 is doubled.

Phase T3: The person responsible for testing (Eero Tuomikoski) was on vacation in January 2001; therefore, the schedule has been changed from the previous one presented at the end of phase T2. Due to this rescheduling, phase T4 is heavily loaded with testing.

Phase T4: Automating all integration and module test cases, as decided, took more time than estimated at the beginning of the phase. This explains the relatively small total number of tests. Especially in integration testing, the effort has been concentrated on issues that are more likely to fail than to succeed. This explains the lack of test cases for functionality that has already been well proven to be correct; these are addressed again in phase LU. The second realized risk was that the Database design and implementation were not available early enough to allow time to prepare test cases for them.

| Reference | Title | URL |
| --- | --- | --- |
| [DEMODOC] | SMOXY Phase T2 Demo Documentation | http://amnesia.tky.hut.fi/smoxy/t2/eTLA_DemoDoc_T2.html |
| [FUNCSPEC] | SMOXY Functional Specification | http://amnesia.tky.hut.fi/smoxy/t4/eTLA_FuncSpec_T4.html |
| [GLOSSARY] | SMOXY Glossary | http://amnesia.tky.hut.fi/smoxy/t3/glos.html |
| [PROJPLAN] | SMOXY Project Plan | http://amnesia.tky.hut.fi/smoxy/t4/eTLA_PPlan_T4.html |
| [REQSPEC] | SMOXY Requirement Specification | http://amnesia.tky.hut.fi/smoxy/t3/eTLA_reqs_T3.html |
| [SMOXY] | SMOXY Home Page | http://amnesia.tky.hut.fi/smoxy/ |
| [TECHSPEC] | SMOXY Technical Specification | http://amnesia.tky.hut.fi/smoxy/t4/eTLA_TechSpec_T4.html |
| [TESTREPORT] | SMOXY Test Report | http://amnesia.tky.hut.fi/smoxy/t4/eTLA_TestReport_T4.html |